More Fearmongering From the Cult of AI

30 September 2021 - 3:22 pm

Yesterday, 29th September 2021, the Times deluged Twitter with a sequence of tweets about Mo Gawdat, someone they refer to as a “former Google supremo” (whatever that is), and a “Silicon Valley supergeek who believes we face an apocalyptic threat from AI”.

The work of Kurt Gödel and Alan Turing has proven that the “AI” Mo Gawdat believes in is a fictional fantasy. Some things worth checking out on the topic are Gödel’s discovery called “The Incompleteness Theorem” (Wikipedia link), his paper titled “On Formally Undecidable Propositions of Principia Mathematica and Related Systems”, published in 1931, and Turing’s “Halting Problem”, first published in 1936 (Wikipedia link).

In brief, Gödel’s Incompleteness Theorem can be loosely paraphrased as:

“Anything you can draw a circle around cannot explain itself without referring to something outside the circle – something you have to assume but cannot prove.”

This impacts all systems subject to the laws of logic. All closed systems depend on something outside the system. It is actually incredibly simple and is described in a book by Rudy Rucker titled “Infinity and the Mind” as follows:

- Someone introduces Gödel to a UTM, a machine that is supposed to be a Universal Truth Machine, capable of correctly answering any question at all.

- Gödel asks for the program and the circuit design of the UTM. The program may be complicated, but it can only be finitely long. Call the program P(UTM) for Program of the Universal Truth Machine.

- Smiling a little, Gödel writes out the following sentence: “The machine constructed on the basis of the program P(UTM) will never say that this sentence is true.” Call this sentence G for Gödel. Note that G is equivalent to: “UTM will never say G is true.”

- Now Gödel laughs his high laugh and asks UTM whether G is true or not.

- If UTM says G is true, then “UTM will never say G is true” is false. If “UTM will never say G is true” is false, then G is false (since G = “UTM will never say G is true”). So if UTM says G is true, then G is in fact false, and UTM has made a false statement. So UTM will never say that G is true, since UTM makes only true statements.

- We have established that UTM will never say G is true. So “UTM will never say G is true” is in fact a true statement. So G is true (since G = “UTM will never say G is true”).

- “I know a truth that UTM can never utter,” Gödel says. “I know that G is true. UTM is not truly universal.”
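The two branches of Rucker’s story can be checked mechanically. What follows is only a minimal sketch: the function names `g_is_true` and `utm_failure` are made up for illustration, and the “machine” is reduced to a single yes/no fact, namely whether UTM ever says that G is true.

```python
def g_is_true(utm_says_g_is_true: bool) -> bool:
    # G is the sentence "UTM will never say G is true",
    # so G is true exactly when UTM does not say it.
    return not utm_says_g_is_true


def utm_failure(utm_says_g_is_true: bool) -> str:
    # Compare what UTM does with the actual truth value of G.
    g = g_is_true(utm_says_g_is_true)
    if utm_says_g_is_true and not g:
        return "UTM asserted a falsehood"
    if not utm_says_g_is_true and g:
        return "UTM left a truth unsaid"
    return "no failure"


# Try both things UTM could do when asked about G.
for says in (True, False):
    print(f"UTM says G is true: {says} -> G is {g_is_true(says)}: {utm_failure(says)}")
```

Whichever option is picked, the result is a failure: either UTM says something false, or G is true and UTM never says so.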

An even simpler way of approaching this is “The Liar’s Paradox”, which is the statement:

“I am lying.”

…which is self-contradictory: if it is true then I am lying, so the statement is false, and if it is false then I am not lying, which makes it true.
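As a rough illustration, the paradox can be treated as a search for a truth value that agrees with what the sentence says about itself. The variable names here are hypothetical; the only assumption is that the sentence must be either true or false.

```python
# The liar sentence claims "this statement is false", so a consistent
# truth value v would have to satisfy: v == (not v).
consistent_values = [v for v in (True, False) if v == (not v)]

print(consistent_values)  # [] : neither True nor False works
```

The empty list is the whole point: no assignment of true or false makes the sentence agree with itself.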

This simple logic demonstrates that no system, including the computing systems that are or will be touted as AI, can truly exist as an independent self-learning system capable of evaluating all things correctly, because self-referential systems are inherently flawed and incomplete. Mo Gawdat’s idea that they are “creating God” is entirely at odds with that incompleteness, because the list of qualities attributed to God includes perfection, often described as:

Omnipresent, Omniscient and Omnipotent.

Any system based on logic (which computers are, including the ones Google use for their “AI” projects) cannot fulfil any of those criteria, and clearly requires input from outside the system.

Alan Turing’s “Halting Problem” in computability theory is similar and equally demonstrates (as others have since) that some things are “undecidable”: no system can exist that always correctly decides whether a given arbitrary program halts when run with a given input.

A brief explanation of the Halting Problem is as follows:

We have two computers…

Computer A is a maths computer. The input is a mathematical problem, and it always outputs the correct answer.

Computer C is a Draughts/Checkers computer. The input is the current state of the game board, and it always outputs the best move.

We can make these kinds of computers; they handle solvable problems that can be computed using deterministic systems.

Feeding computer A with an input for computer C will cause computer A to halt, as it cannot process a game board input, only maths problems.

Feeding computer C with an input for computer A will cause computer C to halt, as it cannot process maths problems, only the game input it was designed for.

We have a blueprint for computer A, which completely defines every aspect of how A works, including all logic and input/output systems.

Now we introduce computer H, which is a computer that can solve the halting problem. It works by taking the blueprint for a computer, and determining which inputs are suitable for it, and which are not, i.e. which will get processed and which will cause the system to halt.

We feed computer H with a blueprint, and an input to test. For example we feed it the blueprint for computer A, and a maths problem. H simulates the running of computer A from the blueprint supplied, and gives the simulated version of computer A a maths problem and determines if it halts, i.e. if the given blueprint can handle the given input.

If H was supplied the blueprint for A and a maths problem, we should get the output from H as “Not Halted”.

If we supplied H with the blueprint for A and a game board for C, we should get the output from H as “Halted” as A cannot process input for C.

We could do the same if we supplied H with the blueprint for C, and a game board input and we’d get “Not Halted”, and if we supplied H with the blueprint for C and a maths problem we’d get “Halted”.
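The four expected answers above can be written down as a toy lookup. This is not a real H: it is a sketch that assumes each blueprint simply declares the one kind of input its machine can process, and the names `Blueprint`, `accepts` and `toy_h` are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blueprint:
    name: str
    accepts: str  # the only kind of input this machine can process


COMPUTER_A = Blueprint("A", accepts="maths problem")
COMPUTER_C = Blueprint("C", accepts="game board")


def toy_h(blueprint: Blueprint, input_kind: str) -> str:
    # Toy stand-in for H: it only understands these simplified blueprints.
    return "Not Halted" if blueprint.accepts == input_kind else "Halted"


for bp in (COMPUTER_A, COMPUTER_C):
    for kind in ("maths problem", "game board"):
        print(f"H(blueprint {bp.name}, {kind}) -> {toy_h(bp, kind)}")
```

This only works because the toy blueprints announce what they accept. The real question is whether H can give the right answer for any blueprint and any input at all.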

That would solve the Halting Problem. The issue is can H really exist? The answer as Alan Turing proved is no, it is logically impossible.

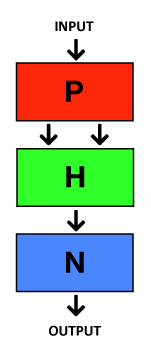

The proof is as follows. Let’s assume for a moment that H can exist and work in the way described. Let’s set things up with two more machines. Machine P is a photocopier. It takes one input and outputs two copies. Machine N is a negator, which means if it receives the input “Not Halted”, it halts, and if it receives the input “Halted” it outputs a tick.

We arrange these machines, along with H like this…

We can wrap all this up into a single machine we’ll call X. We have a blueprint for X that describes everything about how it works, including all logic and input/output systems. We can then feed X with its own blueprint. The possibilities are:

A)

- Blueprint X goes in

- P makes two copies and feeds them to H

- H tests to see if X can process its own blueprint as an input

- H determines yes and outputs “Not Halted”

- N negates that and halts

B)

- Blueprint X goes in

- P makes two copies and feeds them to H

- H tests to see if X can process its own blueprint as an input

- H determines no and outputs “Halted”

- N negates that and outputs a tick

As H is supposed to solve the Halting Problem for any input pair, in scenario A the test by H reported that X fed with itself would not halt, but X actually halted. In scenario B the test by H reported that X fed with itself would halt, but X output a tick. In both cases H was incorrect.
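The construction of X can be sketched in code. The version below is a simplification under stated assumptions: a “blueprint” is just a Python function, the outcomes are the article’s labels “Halted” and “Not Halted”, and the names `build_x`, `always_halted` and `always_not_halted` are hypothetical. It tries only two trivial candidates for H, but the wiring of X is the same for any candidate.

```python
from typing import Callable

Verdict = str  # either "Halted" or "Not Halted"
Blueprint = Callable[..., Verdict]
Checker = Callable[[Blueprint, Blueprint], Verdict]


def build_x(h: Checker) -> Blueprint:
    """Wire P (photocopier), H and N (negator) into one machine X."""

    def x(blueprint: Blueprint) -> Verdict:
        copy_1, copy_2 = blueprint, blueprint  # P: duplicate the input
        prediction = h(copy_1, copy_2)         # H: predict what happens
        # N: do the opposite of whatever H predicted.
        if prediction == "Not Halted":
            return "Halted"      # X halts
        return "Not Halted"      # X outputs a tick instead

    return x


# Two deliberately simple candidates for H (assumptions for the demo).
def always_halted(blueprint: Blueprint, data: Blueprint) -> Verdict:
    return "Halted"


def always_not_halted(blueprint: Blueprint, data: Blueprint) -> Verdict:
    return "Not Halted"


for candidate in (always_halted, always_not_halted):
    x = build_x(candidate)
    predicted = candidate(x, x)  # what H says X will do with its own blueprint
    actual = x(x)                # what X actually does with its own blueprint
    print(f"{candidate.__name__}: predicted {predicted!r}, actual {actual!r}")
```

Because X always returns the opposite of what the candidate predicted about X, the prediction and the actual outcome can never match, whichever candidate is plugged in.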

A common misunderstanding or objection to this is:

“H actually produced the correct output, it was N (the negator) that caused the answer to be wrong, so it is not H’s fault.”

The question is what happens if X is fed its own blueprint? If you just take H and feed it two copies of X’s blueprint and it answers that X will halt, the only way to actually test that is to build X and feed it with its own blueprint. If H is the perfect solution to the Halting Problem and always gives the correct answer, that prediction should be correct. But building X and feeding it its own blueprint is exactly scenario B above, where X outputs a tick instead of halting, and that means H was wrong.

Another objection is “If N is what causes the problem, why not remove it?”, but the goal here is to prove that H cannot work perfectly. The machine X, which contains N, was specifically designed to cause H to fail. We only need to demonstrate one such failure by H to conclude that a perfect Halting Problem solver (i.e. H) cannot exist.

Computers are much faster, smaller and look way cooler than they did in the 1930s, but the way they work in principle has not changed at all. This is basic information technology executing algorithms and manipulating symbols. Whether a computer can do 1 calculation per second or 100 trillion calculations a second, the way it works is the same. There is no sudden intelligence that spontaneously emerges if you just do calculations faster. The evangelists for AI, such as Elon Musk, Sam Harris etc., all talk about computers getting faster and faster, and that is obviously true. But they and their fellow AI cult members believe (or pretend to) that transistor-based logic circuits, which need designing and programming by something “outside the circle”, will at some point in the future, and for some unstated reason, just become “intelligent”.

They never tell you how we go from fast binary calculations to self-improving intelligent machines that become “God”, just that it will happen and we should be afraid. Sound familiar?

Going back to the Times posts on Twitter quoting Mo Gawdat, some of the things said are truly laughable. Only an uber-zealous disciple of AI would ever come out with such nonsense, and it seems Mo fits the bill. The Times tweeted…

He says he glimpsed the apocalypse in a robot arm. Or rather, in a bunch of robot arms, all being developed together. An arm farm.

Mo Gawdat quoted by the Times – https://twitter.com/i/events/1443153917483835398

For a long time they were getting nowhere…

Then, one day, an arm picked up a yellow ball and showed it proudly to the camera

“And I suddenly realised,” he says, “this is really scary”

“We had those things for weeks. And they are doing what children will take two years to do. And then it hit me that they are children. But very, very fast children. They get smarter so quickly! And if they’re children? And they’re observing us? I’m sorry to say, we suck”

In other words, “Robot arm finally does what we’ve spent billions of dollars and millions of hours developing and programming it to do, and we collectively wet the bed because AI and apocalypse”.

The attribution of human traits, emotions, or intentions to non-human entities is called anthropomorphism. It has no real place in computer science, but that’s not what this really is. There is a theory called the “Three-factor theory”, developed by the psychologists Nicholas Epley, Adam Waytz and John Cacioppo, that describes the conditions or predictors of when people are most likely to anthropomorphise. They are:

- Elicited agent knowledge, or the amount of prior knowledge held about an object and the extent to which that knowledge is called to mind.

- Effectance, or the drive to interact with and understand one’s environment.

- Sociality, the need to establish social connections.

When elicited agent knowledge is low and effectance and sociality are high, people are more likely to anthropomorphise.

Mo Gawdat is obviously not a stupid person. You don’t end up Chief Business Officer of Google X if you are stupid. I suspect Gawdat’s “elicited agent knowledge” is not low, but he is part of a group that wants to encourage “effectance” and “sociality” when it comes to AI. It is important to those people who want the public to worship the “God” they claim to be creating that these ideas are established socially, that people form, or at least want to form social connections to devices, and they certainly want these devices to interact with our environment.

The goal is a life with no direct connection to other people, with all interaction taking place through devices that ultimately connect everyone to the AI “God”. This is provable nonsense though, and there is a reason why supposedly “smart” people are pushing an AI agenda that is objectively impossible.

Could it be that politicians are seeing their grip on humanity slipping, with people’s trust in Governments at an all-time low, despite the number of people still devoted to the system, voting, the COVID scam and all the other trappings of Statism? Maybe this idea, the new “God” of AI, in an age where “computer says no” tends to overrule all common sense and renders discussion moot, is intended to be the new Deity?

“Don’t blame us!” the Governments will say. “It wasn’t us that decided you all have to be locked away for your own good. It was the AI God, and it’s never wrong. We’re just implementing Its orders. You’re not going to question God now, are you?”

If you think that sounds utterly absurd, you’re right, it is. But when you hear the likes of Mo Gawdat, reported by mainstream platforms like the Times, talk about “creating God”, and consider Anthony Levandowski, the former Google and Uber executive who has set up a new church called “Way of the Future”, ask yourself how far-fetched it really sounds.

It is not that these people are correct and that super-intelligent AI will truly exist and enslave us all, but that the rich and powerful, who are seeing some resistance to their current schemes to grab total control over everyone and everything, might use this idea too.